Broadcom (AVGO.US) single-handedly revives “AI faith”! ASIC demand surges with the help of DeepSeek

The Zhitong Finance App learned that Broadcom (AVGO.US), one of the biggest winners of the global AI craze, announced financial results for the first quarter of fiscal year 2025, which ended February 2, on the morning of March 7, Beijing time. Broadcom is one of the core chip suppliers for Apple (AAPL.US) and other major technology companies. It is also the core supplier of Ethernet switch chips for the world's largest AI data centers, as well as of AI ASICs, the customized AI chips that are essential for AI training and inference. Broadcom's shares at one point surged nearly 20% in US after-hours trading, mainly on the chip company's strong results and optimistic guidance, which showed investors that tech giants such as Google and Meta and AI leaders such as OpenAI are still spending heavily on artificial intelligence computing power.

Broadcom's strong financial report and outlook can be described as reviving US technology investors' faith in artificial intelligence and lifting US stocks broadly in after-hours trading. Even the “AI chip hegemon” Nvidia (NVDA.US) had not managed to do this. The mixed results from Salesforce (CRM.US), Marvell Technology (MRVL.US), and Nvidia had led some investors who are cautious about “the prospects and paths for monetizing artificial intelligence” to sell popular technology stocks heavily. These investors generally believe that the AI investment frenzy has inflated a bubble in technology stocks. Compounded by rising market expectations that the US economy will fall into “stagflation” under Trump's “tariff storm,” this has caused US technology stocks to keep falling since the end of February.

However, the strong results and optimistic outlook of Broadcom, the strongest player in the AI ASIC field, tell investors that demand for AI computing power is still exploding under the “extremely low-cost AI large-model computing paradigm” led by DeepSeek, and that the so-called “excess AI computing power” is an overblown market concern. In particular, as DeepSeek sets off an “efficiency revolution” in AI training and inference and pushes future AI model development to focus on “low cost” and “high performance,” AI ASICs are entering a demand expansion trajectory even stronger than that of the 2023-2024 AI boom, against the backdrop of a sharp increase in demand for cloud AI inference. Major customers such as Google, OpenAI, and Meta are expected to continue investing heavily in developing AI ASIC chips with Broadcom.

Broadcom said in its outlook, announced on Thursday Eastern Time, that sales are expected to reach about 14.9 billion US dollars for the three-month period ending May 4, exceeding analysts' average expectation of 14.6 billion US dollars. That average forecast has itself risen steadily since the start of the year, highlighting the continued growth in demand from major companies, especially tech giants such as Google and Meta, for Broadcom's Ethernet switch chips and AI ASIC chips. However, Broadcom's forecast falls slightly short of the highest estimate of 15.1 billion US dollars given by some analysts. Revenue and earnings per share for the first fiscal quarter both exceeded analysts' average expectations, which had likewise been rising since the start of the year.

Overall, Broadcom's outlook shows that the historic global build-out of AI computing power and the AI spending boom continue. Broadcom's huge data center customers, the core beneficiaries of this unprecedented wave, continue to invest in new infrastructure. It is worth noting that the AI boom once drove the chip company's market capitalization above 1 trillion US dollars. In 2025, however, US technology investors have generally turned cautious because of Trump's heavy tariff pressure and concerns about excess computing power, and they have been actively seeking evidence that the artificial intelligence boom continues. Broadcom's latest results provide exactly that evidence.

As demand for Broadcom Ethernet switch chips and AI ASIC chips continues to skyrocket, Wall Street is generally bullish on Broadcom's stock price outlook. J.P. Morgan analyst Harlan Sur recently stated in a research report that due to demand for AI computing power and energy efficiency, giants such as Google, Microsoft, Meta, and Amazon are using AI ASICs on an increasingly large scale, so Broadcom, which has huge business exposure, is likely to become a core beneficiary.

J.P. Morgan gave Broadcom an “overweight” rating and set a 12-month target share price of $250. By comparison, Broadcom's stock closed at around $179 on Thursday. On the day before Broadcom announced its earnings report, Evercore ISI, another well-known Wall Street investment firm, raised its target share price for Broadcom from $250 to $267.
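The gap between these targets and the closing price can be expressed as an implied upside. The following is a minimal sketch of that arithmetic, not a valuation method; it assumes the $179.45 regular-session close reported in this article, and the helper name `implied_upside` is hypothetical.

```python
def implied_upside(target_price: float, last_close: float) -> float:
    """Percentage gain implied by a price target relative to the last close."""
    return (target_price / last_close - 1.0) * 100.0


# Assumption: Thursday's regular-session close as reported in the article.
last_close = 179.45

# Target prices quoted in the article.
targets = {"J.P. Morgan": 250.0, "Evercore ISI": 267.0}

upside = {firm: implied_upside(price, last_close) for firm, price in targets.items()}

for firm, pct in upside.items():
    print(f"{firm}: {pct:.1f}% implied upside")
```

On these inputs the two targets imply gains of roughly 39% and 49% from the close, before any after-hours move is taken into account.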

Demand for AI ASICs from “hyperscale customers” continues to be strong

Broadcom's stock at one point surged nearly 20% in US after-hours trading. The stock had closed at $179.45 during regular trading hours, bringing its cumulative decline so far in 2025 to 23%. Broadcom CEO Hock Tan pointed out on the earnings call that spending related to artificial intelligence was a key driver of growth in the first fiscal quarter ended February 2. He said that artificial intelligence sales are expected to reach 4.4 billion US dollars this quarter. According to the results, Broadcom's AI-related revenue in the first fiscal quarter soared 77% year over year to US$4.1 billion, mainly due to increasingly strong adoption of the company's customized AI accelerators, that is, AI ASIC chips.
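These AI revenue figures imply a year-ago base and a sequential growth rate that the article does not state directly. The sketch below derives both from the quoted numbers only; the results are approximations because the inputs are rounded, and the variable names are illustrative.

```python
# Figures quoted in the article (billions of US dollars).
ai_revenue_q1 = 4.1        # AI-related revenue, first fiscal quarter
yoy_growth = 0.77          # 77% year-over-year growth
ai_revenue_q2_guide = 4.4  # AI sales expected for the current quarter

# Year-ago AI revenue implied by the growth rate: base * (1 + g) = current.
implied_year_ago = ai_revenue_q1 / (1.0 + yoy_growth)

# Quarter-over-quarter growth implied by the guidance.
implied_qoq_pct = (ai_revenue_q2_guide / ai_revenue_q1 - 1.0) * 100.0

print(f"Implied year-ago AI revenue: ${implied_year_ago:.2f}B")
print(f"Implied sequential growth: {implied_qoq_pct:.1f}%")
```

Under these rounded inputs, the year-ago quarter works out to roughly 2.3 billion US dollars of AI revenue, and the guidance implies sequential growth of about 7%.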

Before this report, results announced on Wednesday by Marvell Technology (MRVL.US), Broadcom's biggest competitor in the AI ASIC field, were poorly received by the market. Although the company reported quarterly revenue growth of 27% and forecast accelerated growth this quarter, investors judged the growth rate to have fallen short of expectations, and its stock price plummeted 20% on Thursday.

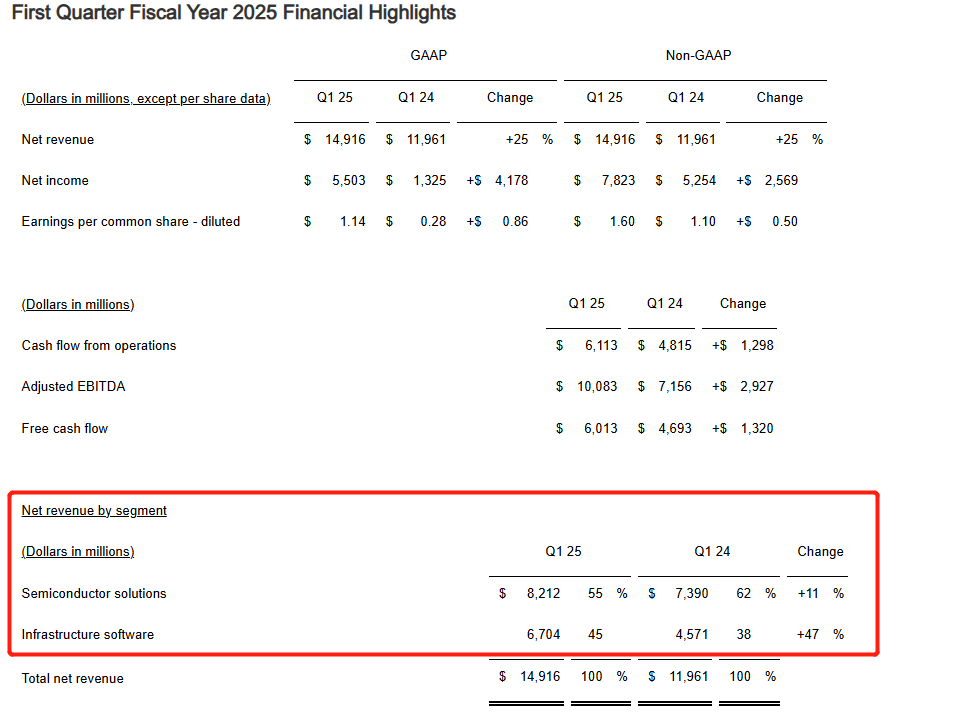

Results for the first fiscal quarter ended February 2 showed that, excluding special items, Broadcom's earnings per share were $1.60, and revenue increased 25% year on year to US$14.92 billion. According to data compiled by Bloomberg, analysts had estimated earnings of 1.50 US dollars per share and revenue of 14.6 billion US dollars. Both figures beat expectations.
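The size of each beat can be expressed as a percentage surprise against the consensus. This is a minimal sketch using only the figures quoted above; the function name `surprise_pct` is hypothetical, and the results are approximate because the inputs are rounded.

```python
def surprise_pct(actual: float, estimate: float) -> float:
    """Percentage by which a reported figure exceeds (or misses) the estimate."""
    return (actual - estimate) / estimate * 100.0


# Reported figures and Bloomberg-compiled estimates quoted in the article.
eps_surprise = surprise_pct(actual=1.60, estimate=1.50)       # US dollars per share
revenue_surprise = surprise_pct(actual=14.92, estimate=14.6)  # billions of US dollars

print(f"EPS surprise: {eps_surprise:.1f}%")
print(f"Revenue surprise: {revenue_surprise:.1f}%")
```

On these numbers the earnings beat (about 6.7%) is noticeably larger than the revenue beat (about 2.2%).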

In terms of other performance indicators, Broadcom's semiconductor business revenue for the first fiscal quarter reached US$8.2 billion, up 11% year on year, higher than analysts' expectations; operating profit for the first fiscal quarter reached US$6.260 billion, surging 200% year on year; under non-GAAP standards, Broadcom's adjusted EBITDA profit for the first fiscal quarter was about US$10.083 billion, up 41% year on year, and non-GAAP net profit was about US$7.823 billion, up 49% year on year.

Although Broadcom produces various types of chips, including iPhone core connectivity components and network technology, investors have recently paid more attention to its customized chip business, or AI ASIC business. This business unit helps large data center customers such as Google, Meta, and OpenAI develop specialized chips that can create and run artificial intelligence application software. Furthermore, following the successful acquisition of VMware, the company is now an important supplier of enterprise-level business management and operation software and networking software.

In a conference call with analysts, CEO Hock Tan said that Broadcom is speeding up the provision of AI ASIC chips to “hyperscale customers,” that is, operators of large-scale data centers such as Meta, Google, and OpenAI, as well as technology giants such as Apple. On the earnings call, he pointed out that in some AI application scenarios, Broadcom's customized semiconductors offer greater performance advantages than the Blackwell or Hopper architecture AI GPUs sold by Nvidia.

Hock Tan announced on the earnings call that the company is actively expanding its group of “hyperscale customers.” There are currently three such customers, and four others are in the process of engaging with Broadcom. Two of those are about to become major revenue-generating customers. “Our hyperscale partners are still actively investing,” he stressed. Hock Tan also expects to complete the tape-out of custom processors (XPUs) for two hyperscale customers this year.

It is worth noting that the revenue related to Broadcom's potential new hyperscale customers mentioned above has not been reflected in the company's current AI revenue forecast (estimated to be in the range of 60 to 90 billion US dollars in 2027). This means that Broadcom's market opportunities may break through the market's current framework of expectations.

At the previous earnings call, Broadcom management expected the potential market size of AI components (Ethernet chips + AI ASICs) built for global data center operators to reach 60 to 90 billion US dollars by fiscal year 2027. Hock Tan emphasized on that call: “In the next three years, the opportunities related to AI chips will be extremely vast.” “The company is cooperating with large cloud computing customers to develop customized AI chips. We currently have three hyperscale cloud customers. They have developed their own multi-generation 'AI XPU' roadmap and plan to deploy at different speeds over the next three years. We believe they are each planning to deploy 1 million XPU clusters on a single architecture by 2027.” XPU here refers to a “highly scalable” processor architecture, usually referring to AI ASICs, FPGAs, and other customized AI accelerator hardware other than Nvidia AI GPUs.

Broadcom Ethernet switch chips are mainly used in data centers and server cluster equipment, where they process and transmit data streams efficiently and at high speed. Broadcom Ethernet chips are essential for building AI hardware infrastructure because they ensure high-speed data transmission between GPU processors, storage systems, and networks. This is extremely important for generative AI such as ChatGPT, especially for applications that must process large amounts of input data in real time, such as the DALL-E text-to-image and Sora text-to-video models.

More importantly, thanks to its absolute technological leadership in chip-to-chip interconnect communication and high-speed data transmission between chips, Broadcom has in recent years become the most important participant in customized ASIC chips for AI. A prime example is Google's self-developed server AI chip, the TPU AI accelerator, which Broadcom and the Google team developed together. In addition to chip design, Broadcom provided Google with key intellectual property for chip-to-chip interconnect communication and was responsible for manufacturing, testing, and packaging the new chips, thereby supporting Google's expansion of new AI data centers.

Through a series of major acquisitions, Hock Tan has built one of the most valuable companies in the chip industry. Its software business unit, which was reorganized after the acquisition of VMware, is approaching the semiconductor business in size. This broad footprint makes Broadcom's outlook a bellwether for demand across the entire technology industry. According to the financial report, Broadcom's semiconductor division had quarterly revenue of US$8.21 billion, up 11% year on year, and the software division's revenue was 6.7 billion US dollars; both exceeded expectations.

The “AI ASIC Super Wave” led by Google and Meta is coming

Strong demand for Ethernet switch chips and AI ASIC chips, both closely tied to AI training and inference systems, can be clearly seen in Broadcom's strong revenue data, which continued to exceed expectations in FY2024 and at the beginning of FY2025. In particular, customized AI ASIC chips have become an increasingly important source of revenue for Broadcom. With strong demand for its Ethernet switch chips in major data centers around the world and absolute technological leadership in chip-to-chip interconnect communication and high-speed data transmission between chips, Broadcom has been the most important participant in the field of AI chips in recent years.

Based on its unique chip-to-chip communication technology and its many data transmission patents, Broadcom has become the most important participant in the AI ASIC chip market within AI hardware. Not only does Google continue to choose Broadcom to design and develop customized AI ASIC chips, but giants such as Apple and Meta, along with more data center operators, are expected to partner with Broadcom over the long term to build high-performance AI ASICs.

With the sharp drop in AI training costs and in inference token costs led by DeepSeek, AI agents and generative AI software are expected to penetrate all walks of life more quickly. Judging from the responses of Western tech giants such as Microsoft, Meta, and ASML, all of them admired DeepSeek's innovation, but it did not shake their determination to invest in AI on a large scale. They believe that the new technology route led by DeepSeek is expected to bring about an overall decline in AI costs. In particular, as the market for AI applications grows, demand for cloud AI inference computing power will inevitably become much larger.

Microsoft CEO Nadella previously cited the “Jevons Paradox”: when technological innovation dramatically increases efficiency, resource consumption does not decrease but instead surges. Applied to artificial intelligence computing power, this means an unprecedented demand for AI inference capacity brought about by the surge in the scale of AI model applications. For example, since DeepSeek was connected to WeChat, DeepSeek's deep-thinking mode has frequently been unable to respond to user requests, which suggests that the current build-out of AI computing infrastructure is far from meeting demand.

Morgan Stanley recently pointed out in a research report that US tech giants are expected to invest 500 billion US dollars over the next 4 years, and that the “Stargate” project plans to invest 500 billion US dollars over the next 4 years, of which 100 billion US dollars will be deployed immediately, so demand for Nvidia AI GPUs and for the AI ASICs supplied by ASIC manufacturers remains very strong. Coupled with the rapid penetration of DeepSeek models into all walks of life in China, the vast demand for AI inference will ignite a new wave of demand across the AI chip industry chain.

Morgan Stanley raised its 2025 overall spending forecast for major North American cloud computing companies, lifting the year-on-year growth rate to 32% from the previous 29%. In other words, the capital expenditure of the top ten cloud service giants in North America is expected to reach 350 billion US dollars in 2025, mainly on the sharp expansion in demand for cloud AI computing power.

As US tech giants insist on investing heavily in artificial intelligence, AI ASIC giants such as Broadcom, Marvell Technology, and Alchip of Taiwan may benefit the most. Microsoft, Amazon, Google, Meta, and even generative AI leader OpenAI are all teaming up with Broadcom or other ASIC giants to iterate on AI ASIC chips for massive inference-side deployment of AI computing power. The market share of AI ASICs is therefore expected to expand much faster than that of AI GPUs, and the two will tend toward equal shares, in contrast to the current situation, where AI GPUs dominate with up to 90% of the AI chip field.

However, this transformation will not happen overnight. AGI is still under development, and the flexibility and versatility of AI GPUs remain the most important capability for AI training. Large-scale AI models, such as the GPT family and the Llama open source family, still require high operator flexibility and network structure variability during the “research and exploration” or “rapid iteration” stage. This is the main reason why general-purpose GPUs still have an advantage.

On the Google and Meta earnings calls, Pichai and Zuckerberg both said they will step up efforts to launch self-developed AI ASICs with chip manufacturer Broadcom. The AI ASIC technology partner of both giants is Broadcom, a leader in customized chips. For example, the TPU (Tensor Processing Unit) created by Google and Broadcom is the most typical AI ASIC. Meta and Broadcom have previously co-designed Meta's first-generation and second-generation AI training/inference acceleration processors, and the two are expected to accelerate development of Meta's next-generation AI chip, MTIA 3, in 2025. OpenAI, which has received huge investment from and cooperates closely with Microsoft, said in October last year that it would work with Broadcom to develop OpenAI's first AI ASIC chip.

As large model architectures gradually converge on several mature paradigms (such as standardized Transformer decoders and Diffusion model pipelines), ASICs can more easily absorb the mainstream inference-side computing load. Furthermore, some cloud service providers or industry giants will deeply couple their software stacks, make ASICs compatible with common network operators, and provide excellent developer tools, which will accelerate the spread of ASIC inference in routine, large-volume scenarios.

Looking ahead, Nvidia AI GPUs may focus more on large-scale cutting-edge exploratory training, rapid testing of fast-changing multimodal or novel architectures, and general-purpose computing such as HPC, graphics rendering, and visual analysis. AI ASICs focus on extreme optimization of specific deep learning operators and data flows; that is, they excel at inference on stable architectures, high batch throughput, and high energy efficiency. For example, if AI workloads on a cloud platform make extensive use of common CNN/Transformer operators (such as matrix multiplication, convolution, LayerNorm, and Attention), most AI ASICs will deeply customize these operators. Image recognition (ResNet series, ViT), Transformer-based automatic speech recognition (Transformer ASR), Transformer decoder-only models, and some multimodal pipelines can be extremely optimized on ASICs once their structure is fixed.

As Morgan Stanley predicted in its research report, in the long run the two will coexist harmoniously, and the AI ASIC market share is expected to expand significantly in the medium term. Nvidia's general-purpose GPUs will focus on complex, variable scenarios and cutting-edge research, while ASICs will focus on high-frequency, stable, large-scale AI inference workloads and on some mature, fixed training processes.
